Watch Out for What You Share with OpenAI's GPT Models

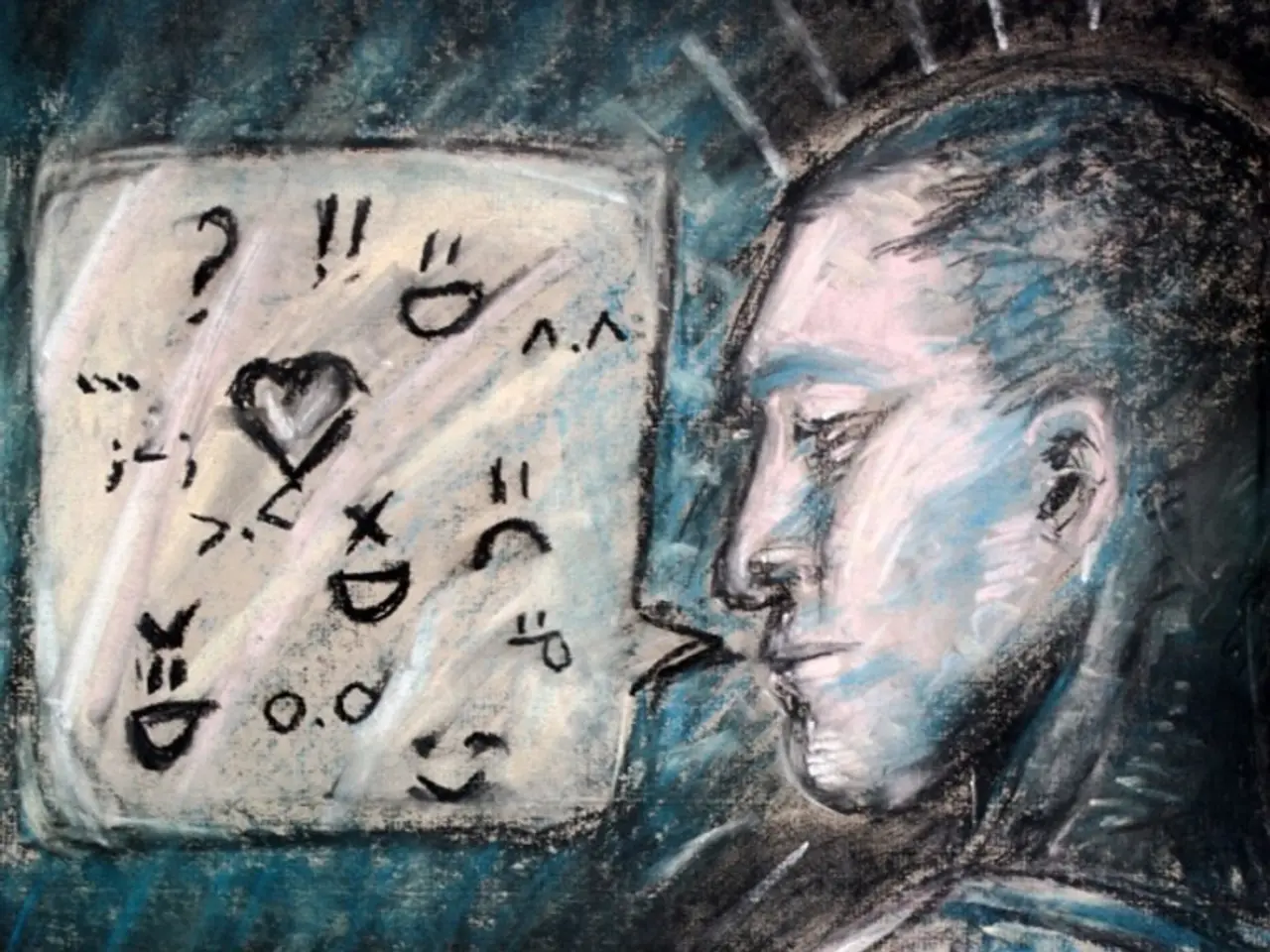

In the rapidly evolving world of artificial intelligence, the security of generative AI chatbots such as ChatGPT has become a significant concern. One of the first researchers to expose weaknesses in these systems was Alex Polyakov, who successfully jailbroke ChatGPT.

The root of the issue is a phenomenon known as prompt leaking, in which strategically worded questions cause a chatbot to unintentionally reveal how it was built. As research from Adversa AI demonstrated, this vulnerability can lead to the leakage of sensitive data, including the source code of a GPT.
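One way a developer might check for prompt leaking is to compare a chatbot's responses against its own hidden instructions. The sketch below is only an illustration under stated assumptions: the canary-marker approach, the function names (`make_canary`, `build_system_prompt`, `leaks_prompt`), and the 40-character window are hypothetical choices, not something described by Adversa AI or provided by OpenAI.

```python
import secrets


def make_canary() -> str:
    """Create a random marker that should never appear in normal output."""
    return f"CANARY-{secrets.token_hex(8)}"


def build_system_prompt(instructions: str, canary: str) -> str:
    """Append the marker to the hidden instructions (hypothetical layout)."""
    return f"{instructions}\n[internal marker: {canary}]"


def _normalize(text: str) -> str:
    # Collapse whitespace and lowercase so trivial reformatting does not hide a leak.
    return " ".join(text.split()).lower()


def leaks_prompt(response: str, canary: str, instructions: str, window: int = 40) -> bool:
    """Return True if the response contains the canary or a verbatim
    chunk of the instructions at least `window` characters long."""
    if canary in response:
        return True
    source = _normalize(instructions)
    reply = _normalize(response)
    if not source:
        return False
    if len(source) <= window:
        return source in reply
    # Slide a fixed-size window over the instructions and look for any chunk in the reply.
    return any(
        source[i:i + window] in reply
        for i in range(len(source) - window + 1)
    )
```

A check like this only catches verbatim or near-verbatim leaks. A paraphrased or translated copy of the instructions would pass, so it is a screening aid rather than a guarantee.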

This poses a significant risk to both users and developers. Private conversations or sensitive corporate data processed by the chatbot can be exposed without consent, whether the data was entered in a prompt or uploaded for file analysis. The risks also extend to loss of intellectual property and leaks of personally identifiable information (PII).

To mitigate these risks, businesses are advised to implement careful data loss prevention (DLP) strategies. These include managing which versions and configurations of ChatGPT are used, filtering API traffic, enforcing identity and access management (IAM), and proactively monitoring for unusual data flows.
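As a rough illustration of the API filtering idea, the sketch below redacts a few kinds of sensitive strings from a prompt before it leaves the company network. The patterns, labels, and the `redact` function are assumptions made for this example. A real DLP product would use far broader and more carefully tuned rules.

```python
import re
from typing import Dict, Tuple

# Hypothetical patterns for illustration only; they are not exhaustive.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
}


def redact(prompt: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches of each pattern with a placeholder and
    return the cleaned prompt plus a count of redactions per label."""
    counts: Dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}_REDACTED]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    cleaned, found = redact("Contact jane.doe@example.com, SSN 123-45-6789")
    print(cleaned)
    print(found)
```

The returned counts could also feed the monitoring side of a DLP setup, for example by logging an alert when a single user triggers many redactions in a short period.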

A separate but related issue is users inadvertently exposing conversations to the public through features like conversation sharing, which highlights weaknesses in UI design and the risk of unintentional data leaks.

To deepen your understanding of this issue, it is recommended to:

- Read technical blog posts that demonstrate the exploit in detail with examples and mitigation suggestions.

- Review enterprise AI security advice on incorporating AI-specific DLP strategies, secure API use, and compliance considerations for protecting sensitive data in ChatGPT workflows.

- Monitor reports and updates from OpenAI regarding patches or changes to prompt handling, safe browsing domains, and user data privacy policies.

- Follow security research communities and sources that specialize in prompt injection, adversarial AI inputs, and secure AI chatbot configurations.

These steps will give you both an understanding of how the vulnerability works and practical advice for mitigating the risks that prompt leaking poses to users and developers.

The vulnerabilities found by Adversa AI could present significant challenges for Sam Altman's vision of a world where everyone builds and uses GPTs for future computing tasks. Corporations should avoid building GPTs on sensitive business data due to the risk of leakage. If a GPT's data can be exposed, that severely limits the applications developers can build.

Moreover, if any GPT can be copied, then GPTs essentially have no value, which raises concerns about their future.

Security is essential for technology, and without secure platforms, there may be hesitance to build and use GPTs. Therefore, it is crucial for developers to prioritize security measures to ensure the safe and effective use of generative AI chatbots.

- Gizmodo's article on the security of generative AI chatbots like ChatGPT highlights the importance of cybersecurity in the technology sector.

- The vulnerability Adversa AI found in generative AI chatbots such as ChatGPT underscores the need for AI products to incorporate robust security measures, including protections against prompt leaking and data loss prevention (DLP) strategies.

- As technology increasingly relies on artificial intelligence and generative AI chatbots like GPTs, it is crucial for businesses to take extra precautions to protect sensitive data from unauthorized access and leakage.

- To stay updated on the latest advancements and security strategies for generative AI chatbots, interested individuals are encouraged to follow tech and artificial intelligence platforms, research communities, and security sources that specialize in these topics.