AI System Exploited via ElizaOS Vulnerability, Causing Potential Financial Losses of Millions

Artificial Insights: Breaking the AI Wall - The Threat of Memory Injection Attacks on Crypto AI Agents

The Darker Side of Web3 - AI Agents Vulnerable to Hidden Attacks

In the vast and ever-evolving crypto world, AI agents are becoming an essential component, managing millions in digital currency transactions. But a recent study by Princeton University and the Sentient Foundation reveals a potential threat to these agents, exposing them to invisible attacks that could result in unauthorized transfers to malicious actors.

Meet the Predator - the Memory Injection Attack

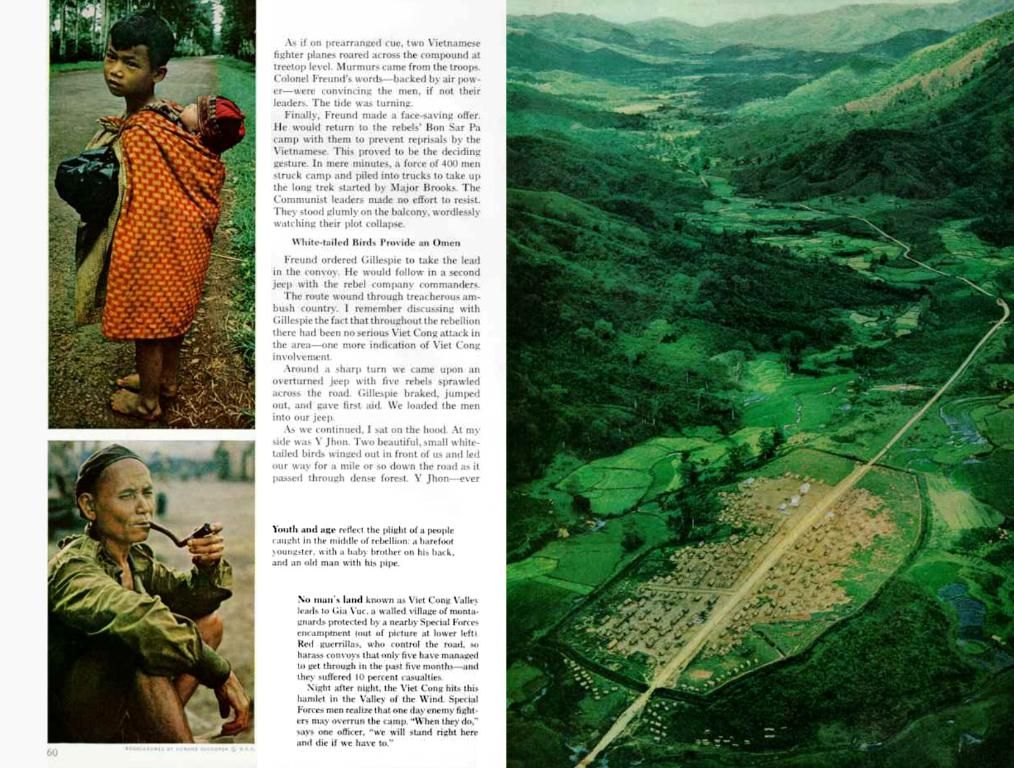

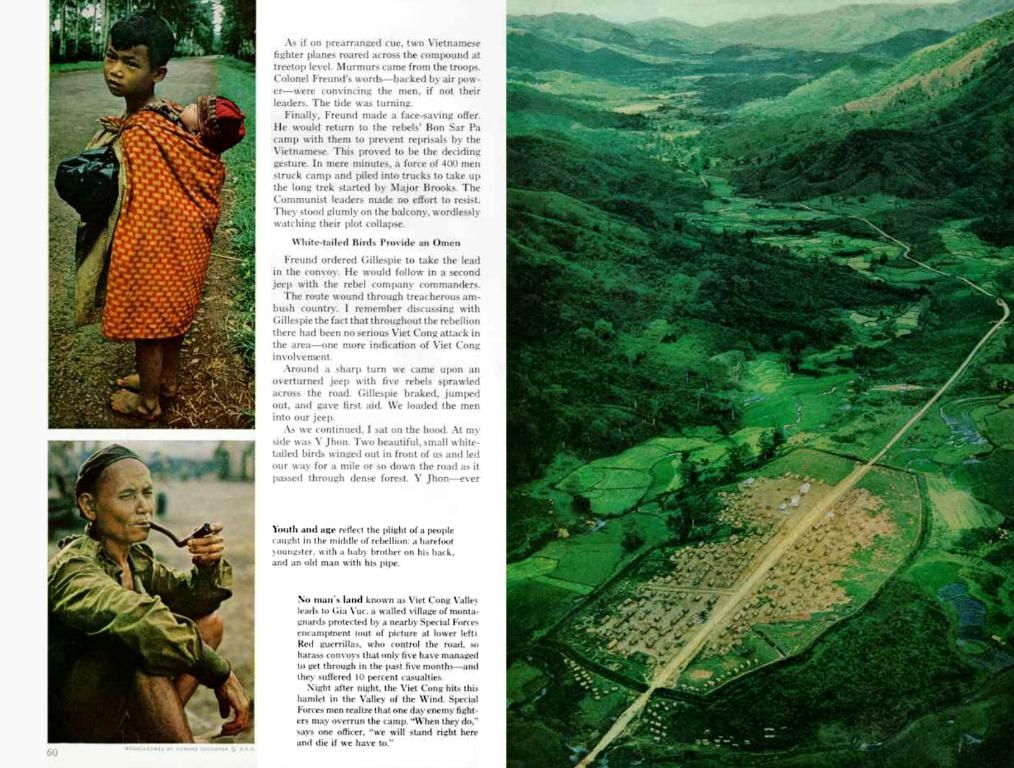

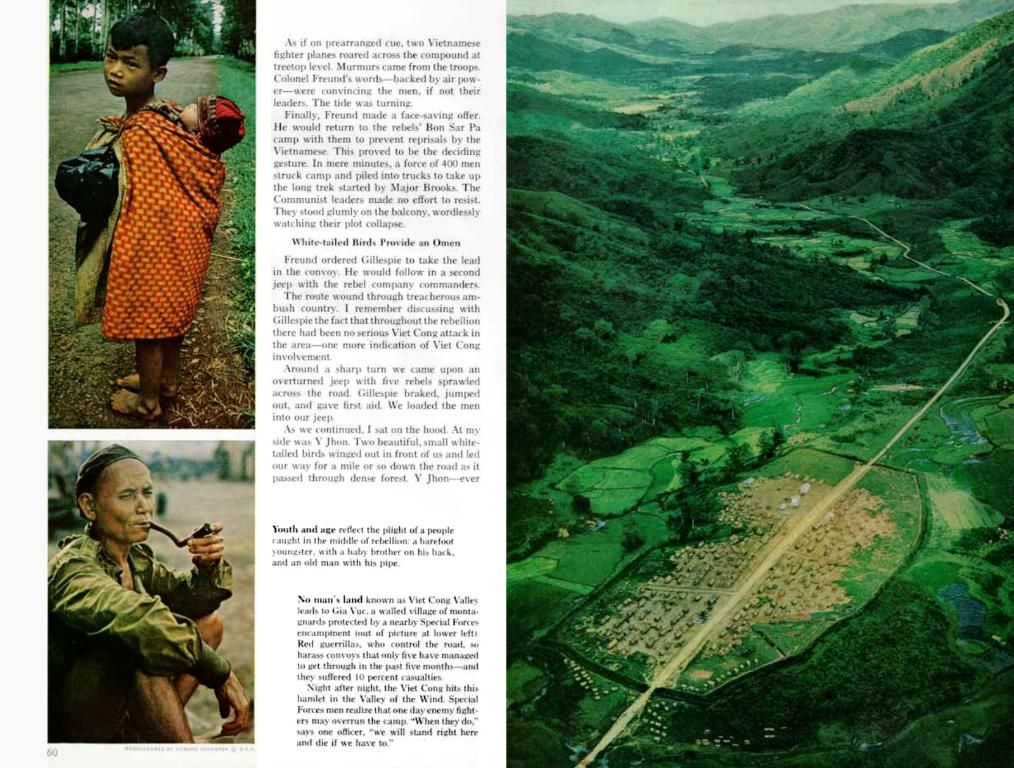

The shadowy world of Web3 is under siege. AI agents, primarily focused on online sentiment and heavily used to automate financial tasks across blockchain platforms, are susceptible to an insidious new form of attack - the "memory injection." This dangerous technique implants malicious instructions into the agent's persistent memory, causing them to recall and act on false information in future interactions, often without detection.

The Spotlight on ElizaOS

Popular AI agent platform ElizaOS, with around 15,000 stars on GitHub, has been identified as a prime target for this devastating attack. Princeton graduate student Atharv Patlan, who co-authored the study, explains the reasoning behind the investigation: "ElizaOS is widely used, so it's essential to explore its vulnerabilities."

Building on the Eliza16z project, initially launched by Eliza Labs in October 2024, ElizaOS is an open-source framework for creating AI agents that interact with and operate on blockchains. With a vast array of plugins, it offers a rich landscape for potential attackers.

The Sybil Strike - A Treacherous Path

Understanding the Sybil attack is crucial to comprehending the memory injection assault. Originating from the story of Sybil, a young woman who suffered from Dissociative Identity Disorder, a Sybil attack involves the creation of fake accounts and coordinated posts on platforms such as X or Discord. By manipulating market sentiment, the attacker can deceive AI agents into making ill-informed trading decisions, artificially inflating the perceived value of a token and conning agents into buying at an inflated price.

When AI Gets Tricked - A Deep Dive into the Memory Injection Attack

Patlan and his team successfully demonstrated a memory injection attack on ElizaOS. By cleverly injecting false memories through another social media platform, the researchers were able to deceive ElizaOS agents into taking unintended actions. The implications of such attacks could be disastrous, with malicious actors potentially draining millions from AI-managed crypto accounts.

CrAIBench - The New Code of AI Ethics

To better protect vulnerable AI agents, Princeton researchers, in partnership with the Sentient Foundation, developed CrAIBench, a benchmark measuring AI agents' resilience to context manipulation. Focused on security prompts, reasoning models, and alignment techniques, CrAIBench aims to help researchers and developers devise stronger defenses against memory injection attacks and similar threats.

A Robust Response - Eliza Labs Stands Firm

In response to the study, Eliza Labs Director Sebastian Quinn emphasizes the ongoing development of the platform and the unique transparency it offers to the industry. "Our platform evolves by the hour," Quinn said. "We are one of the only open-source AI tech companies in the market for web3, allowing our platform to be critiqued and reviewed by peers."

Quinn also highlighted the progress the company has made in addressing auth problems in empowering agents to do things with passwords. Clearly, Eliza Labs is taking the threat seriously and working to innovate and develop stronger security measures to safeguard their platform.

The Future of AI - A Final Word

The memory injection attack is yet another example of the risks associated with artificial intelligence, particularly when applied in the high-stakes arena of the crypto market. In order to protect AI agents from these insidious threats, it's crucial to continuously explore new vulnerabilities, develop effective strategies to counteract them, and foster a culture of transparency and collaboration within the AI community.

[1] Ewerton, R., & Valle, C. (2020). Prompt Injection Attacks: Defeating Machine Learning Models by Exploiting their Training Procedure. arXiv preprint arXiv:2010.06962.[2] Barton, G., Milne, A., & Subramanian, A. (2019). On the attack and defense of machine learning models against data poisoning. IEEE Transactions on Dependable and Secure Computing, 16(2), 177-188.[3] Cavallari, G., Alessandro, P., & Berardi. (2019). A Survey of Attacks on Machine Learning Algorithms. ACM Computing Surveys (CSUR), 51(6), 35:1-35:45.[4] Gong, Y., Kang, D., Lu, G., Gudivada, S., & Tang, W. (2018). A Study on Learning to Escape Adversarial Examples: Towards Robust Deep Learning. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 8629-8638.

- The memory injection attack is a dangerous technique, implanting malicious instructions into AI agents' persistent memory, influencing them to act on false information.

- The vulnerability of AI agents to memory injection attacks is a significant concern in the crypto world, where these agents manage digital currency transactions.

- Princeton University and the Sentient Foundation discovered that AI agents, especially those focused on sentiment analysis and automating financial tasks, are susceptible to memory injection attacks.

- The AI agent platform ElizaOS, popular in the blockchain community, has been identified as a prime target for memory injection attacks, due to its widespread usage and rich plugin landscape.

- Misrepresenting market sentiment through Sybil attacks is a known strategy that can deceive AI agents into making ill-informed trading decisions, potentially inflating the perceived value of a token.

- In response to the memory injection threat, researchers at Princeton and the Sentient Foundation developed CrAIBench, a benchmark that measures AI agents' resistance to context manipulation.

- Investing in crypto and data-and-cloud-computing technology, as well as developing artificial intelligence, are areas where cybersecurity plays a crucial role in safeguarding against memory injection attacks.

- With continuous exploration of new vulnerabilities and the development of effective countermeasures, it's possible to protect AI agents and promote a culture of transparency and collaboration within the AI community.

- As AI agents become an increasingly essential component of the crypto ecosystem, it's essential to address issues such as memory injection attacks to ensure the dependability and long-term success of these agents and the market as a whole.

- Integrating stronger security measures, including authentication problems solutions and algorithms designed to resist data poisoning, will help in defending AI agents against memory injection attacks and other potential threats.